Data Box appliances and solutions for data transfer to Azure and edge compute.

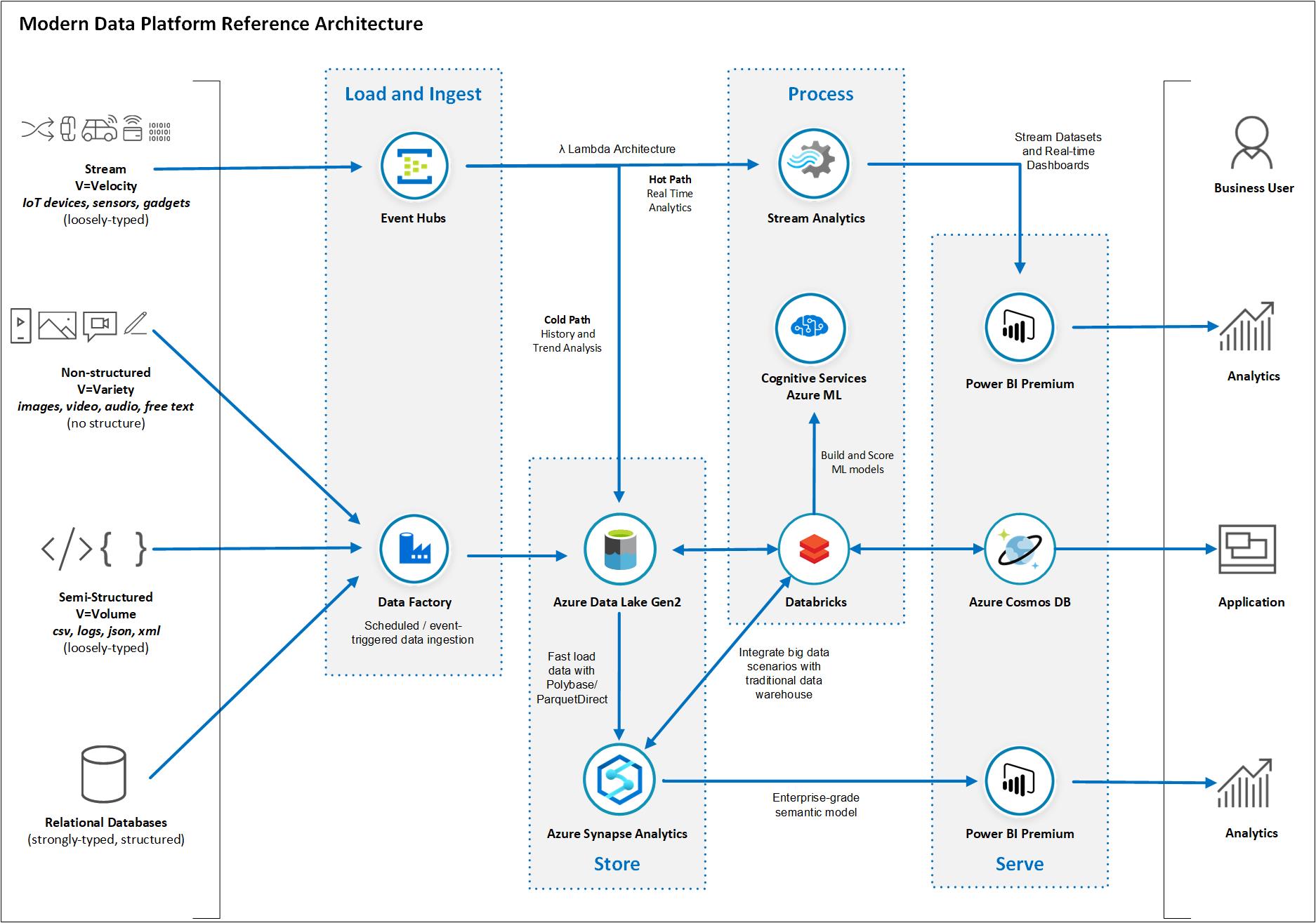

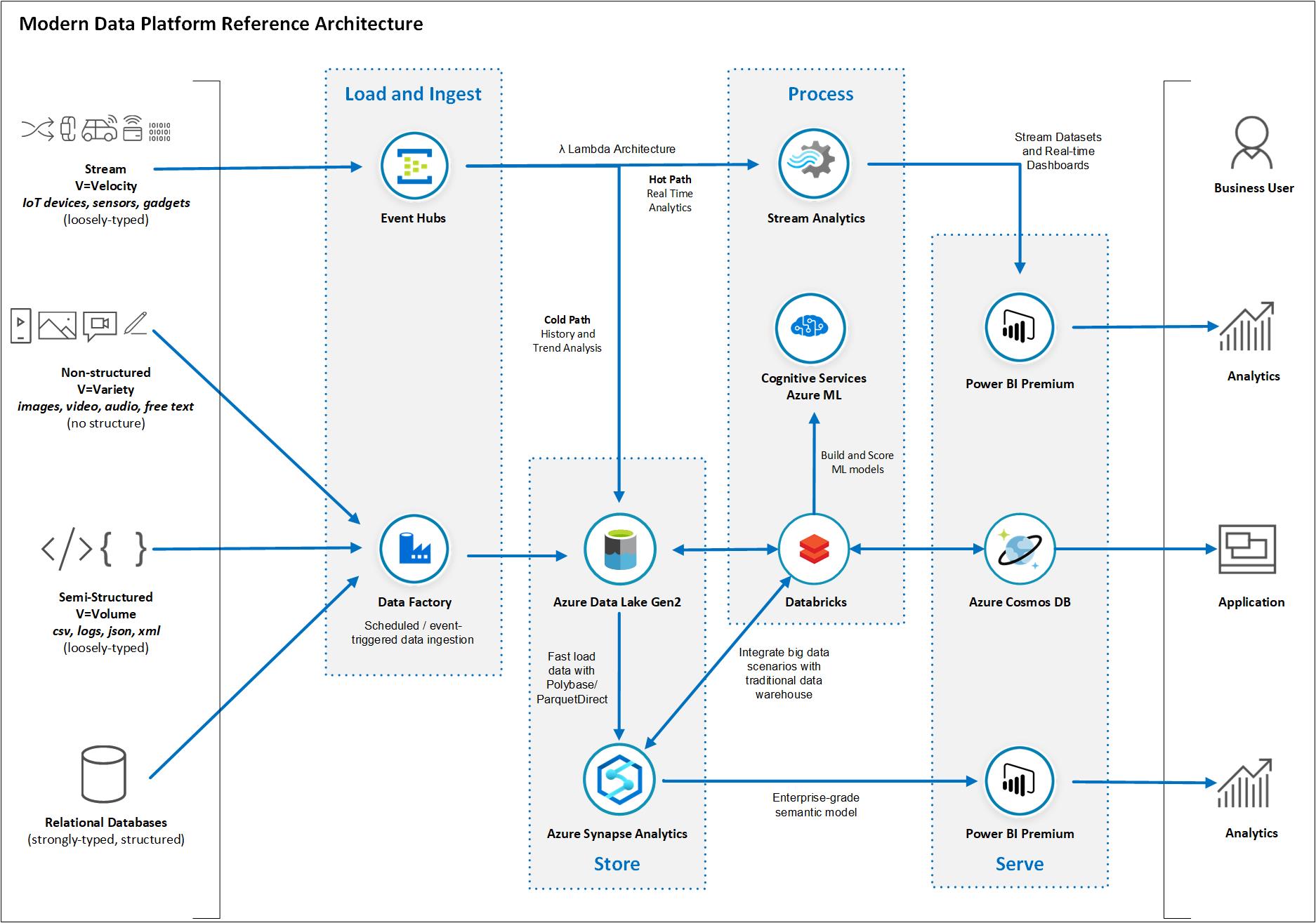

Azure Data Lake solution architecture.

Because the data sets are so large, a big data solution must often process data files using long-running batch jobs to filter, aggregate, and otherwise prepare the data for analysis.
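The batch pattern described above (filter, aggregate, prepare) can be sketched in a few lines of Python. This is only a minimal illustration using the standard library on local CSV files, not an Azure-specific implementation. The function name `run_batch_job`, the `region` and `amount` columns, the `min_amount` threshold, and the choice to write the result to a new summary file are all assumptions made for the example.

```python
import csv
import tempfile
from collections import defaultdict
from pathlib import Path


def run_batch_job(input_dir: Path, output_file: Path, min_amount: float = 0.0) -> dict:
    """Read every CSV in input_dir, drop small rows, and sum amounts per region.

    The column names ("region", "amount") are hypothetical.
    """
    totals = defaultdict(float)
    for path in sorted(input_dir.glob("*.csv")):
        with path.open(newline="") as handle:
            for row in csv.DictReader(handle):
                amount = float(row["amount"])
                if amount < min_amount:  # filter step
                    continue
                totals[row["region"]] += amount  # aggregate step

    # Prepare step: write the summarized data to a new file for analysis.
    with output_file.open("w", newline="") as handle:
        writer = csv.writer(handle)
        writer.writerow(["region", "total_amount"])
        for region in sorted(totals):
            writer.writerow([region, totals[region]])
    return dict(totals)


def demo() -> dict:
    """Run the job on two small, made-up input files in a temporary directory."""
    with tempfile.TemporaryDirectory() as tmp:
        raw = Path(tmp) / "raw"
        raw.mkdir()
        (raw / "part-1.csv").write_text("region,amount\neast,10\nwest,2\n")
        (raw / "part-2.csv").write_text("region,amount\neast,5\nwest,20\n")
        return run_batch_job(raw, Path(tmp) / "summary.csv", min_amount=5)
```

In a real data lake the input files would live in distributed storage and the job would typically run on a distributed processing engine, but the read, filter, aggregate, and write shape stays the same.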

When to use a data lake.

Typical uses for a data lake.

Optimize cost and maximize resource efficiency while remaining compliant with cross-cloud architecture.

Data lake storage is designed for fault tolerance, infinite scalability, and high-throughput ingestion of data with varying shapes and sizes.

Cost Management and Billing: optimize what you spend on the cloud.

Azure's hybrid connection is a foundational blueprint that applies to most Azure Stack solutions, allowing you to establish connectivity for any application that involves communication between the Azure public cloud and on-premises Azure Stack components.

This kind of store is often called a data lake.

Data lake processing involves one or more processing engines that are built with these goals in mind and can operate on data stored in a data lake at scale.

To support our customers as they build data lakes, AWS offers the data lake solution, an automated reference implementation that deploys a highly available, cost-effective data lake architecture on the AWS Cloud, along with a user-friendly console for searching and requesting datasets.


Data Lake Analytics gives you power to act on.

The QnA Maker tool makes it easy for content owners to maintain their knowledge base of.

Usually these jobs involve reading.

Data Lake is a key part of Cortana Intelligence, meaning that it works with Azure Synapse Analytics, Power BI, and Data Factory for a complete cloud big data and advanced analytics platform that helps you with everything from data preparation to interactive analytics on large-scale datasets.

Azure Data Lake Storage: massively scalable.


Options for implementing this storage include Azure Data Lake Store or blob containers in Azure Storage.

